Wrongful arrests, an expanding surveillance dragnet, defamation and deep-fake pornography are all actually existing dangers of so-called “artificial intelligence” tools currently on the market. That, and not the imagined potential to wipe out humanity, is the real threat from artificial intelligence.

Beneath the hype from many AI firms, their technology already enables routine discrimination in housing, criminal justice and health care, as well as the spread of hate speech and misinformation in non-English languages. Already, algorithmic management programs subject workers to run-of-the-mill wage theft, and these programs are becoming more prevalent.

Nevertheless, in May the nonprofit Center for AI Safety released a statement, co-signed by hundreds of industry leaders, including OpenAI’s CEO Sam Altman, warning of “the risk of extinction from AI,” which it asserted was akin to nuclear war and pandemics. Altman had previously alluded to such a risk in a Congressional hearing, suggesting that generative AI tools could go “quite wrong.” And in July executives from AI companies met with President Joe Biden and made several toothless voluntary commitments to curtail “the most significant sources of AI risks,” hinting at existential threats over real ones. Corporate AI labs justify this posturing with pseudoscientific research reports that misdirect regulatory attention to such imaginary scenarios using fear-mongering terminology, such as “existential risk.”

The broader public and regulatory agencies must not fall for this science-fiction maneuver. Rather we should look to scholars and activists who practice peer review and have pushed back on AI hype in order to understand its detrimental effects here and now.

Because the term “AI” is ambiguous, it makes having clear discussions more difficult. In one sense, it is the name of a subfield of computer science. In another, it can refer to the computing techniques developed in that subfield, most of which are now focused on pattern matching based on large data sets and the generation of new media based on those patterns. Finally, in marketing copy and start-up pitch decks, the term “AI” serves as magic fairy dust that will supercharge your business.

With OpenAI’s release of ChatGPT (and Microsoft’s incorporation of the tool into its Bing search) late last year, text synthesis machines have emerged as the most prominent AI systems. Large language models such as ChatGPT extrude remarkably fluent and coherent-seeming text but have no understanding of what the text means, let alone the ability to reason. (To suggest so is to impute comprehension where there is none, something done purely on faith by AI boosters.) These systems are instead the equivalent of enormous Magic 8 Balls that we can play with by framing the prompts we send them as questions such that we can make sense of their output as answers.

Unfortunately, that output can seem so plausible that without a clear indication of its synthetic origins, it becomes a noxious and insidious pollutant of our information ecosystem. Not only do we risk mistaking synthetic text for reliable information, but also that noninformation reflects and amplifies the biases encoded in its training data, in this case every kind of bigotry exhibited on the Internet. Moreover, the synthetic text sounds authoritative despite its lack of citations back to real sources. The longer this synthetic text spill continues, the worse off we are, because it gets harder to find trustworthy sources and harder to trust them when we do.

Nevertheless, the people selling this technology propose that text synthesis machines could fix various holes in our social fabric: the lack of teachers in K–12 education, the inaccessibility of health care for low-income people and the dearth of legal aid for people who cannot afford lawyers, just to name a few.

In addition to not really helping those in need, deployment of this technology actually hurts workers: the systems rely on enormous amounts of training data that are stolen without compensation from the artists and authors who created it in the first place.

Second, the task of labeling data to create “guardrails” that are intended to prevent an AI system’s most toxic output from seeping out is repetitive and often traumatic labor carried out by gig workers and contractors, people locked in a global race to the bottom for pay and working conditions.

Finally, employers are looking to cut costs by leveraging automation, laying off people from previously stable jobs and then hiring them back as lower-paid workers to correct the output of the automated systems. This can be seen most clearly in the current actors’ and writers’ strikes in Hollywood, where grotesquely overpaid moguls scheme to buy eternal rights to use AI replacements of actors for the price of a day’s work and, on a gig basis, hire writers piecemeal to revise the incoherent scripts churned out by AI.

AI-related policy must be science-driven and built on relevant research, but too many AI publications come from corporate labs or from academic groups that receive disproportionate industry funding. Much is junk science: it is nonreproducible, hides behind trade secrecy, is full of hype and uses evaluation methods that lack construct validity (the property that a test measures what it purports to measure).

Some recent remarkable examples include a 155-page preprint paper entitled “Sparks of Artificial General Intelligence: Early Experiments with GPT-4” from Microsoft Research, which purports to find “intelligence” in the output of GPT-4, one of OpenAI’s text synthesis machines. They also include OpenAI’s own technical reports on GPT-4, which claim, among other things, that OpenAI systems have the ability to solve new problems that are not found in their training data.

No one can test these claims, however, because OpenAI refuses to provide access to, or even a description of, those data. Meanwhile “AI doomers,” who try to focus the world’s attention on the fantasy of all-powerful machines possibly going rogue and destroying all of humanity, cite this junk rather than research on the actual harms companies are perpetrating in the real world in the name of creating AI.

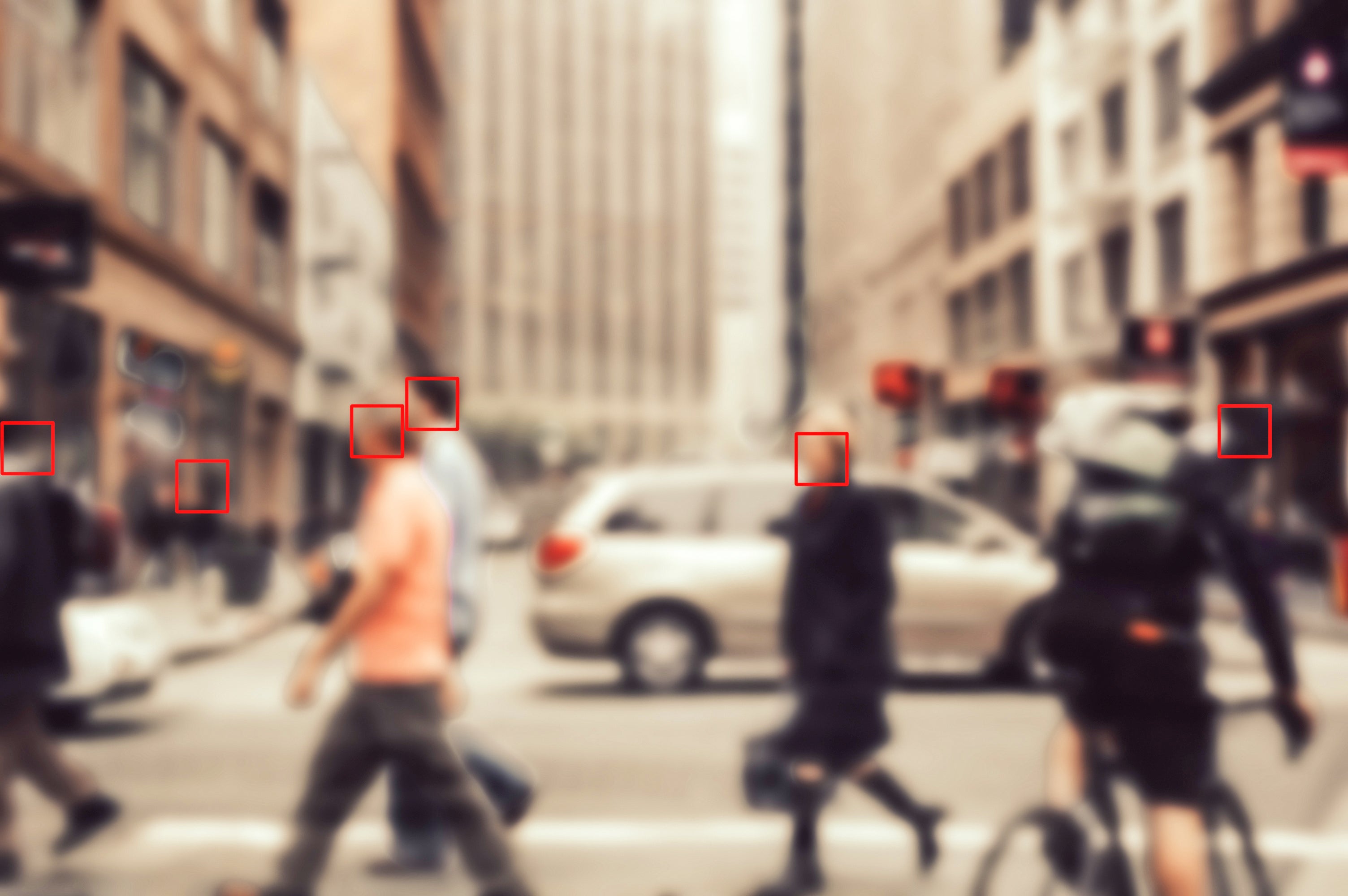

We urge policymakers to instead draw on solid scholarship that investigates the harms and risks of AI, and the harms caused by delegating authority to automated systems, which include the unregulated accumulation of data and computing power, climate costs of model training and inference, damage to the welfare state and the disempowerment of the poor, as well as the intensification of policing against Black and Indigenous families. Solid research in this domain (including social science and theory building) and solid policy based on that research will keep the focus on the people hurt by this technology.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.
